The AI Race: Are We Witnessing Genuine Reasoning or Just Meta-Mimicry?

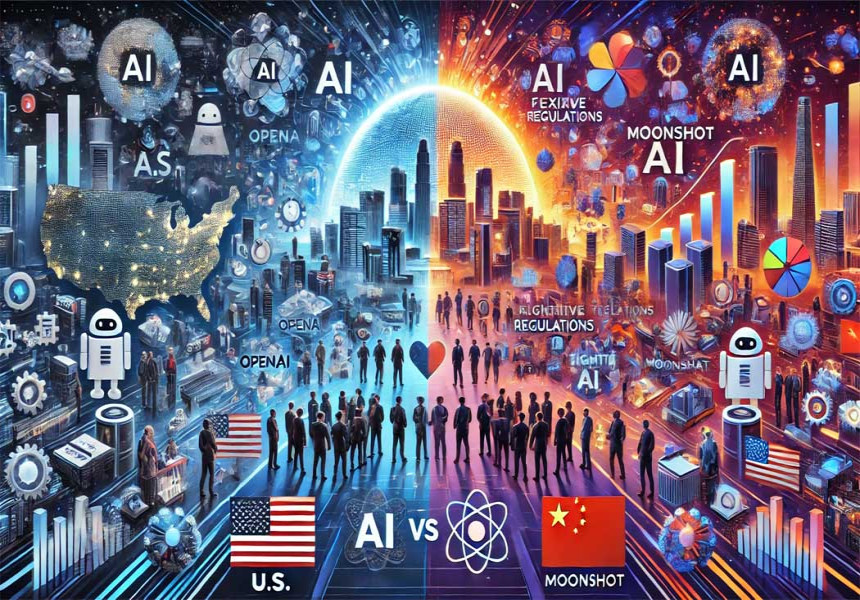

The world of artificial intelligence (AI) is evolving so rapidly that it can feel like a blur, with new models and breakthroughs emerging almost daily. OpenAI launches one model, DeepSeek follows with another, and OpenAI responds with yet another iteration. Each announcement garners attention, but focusing too closely on any single one risks missing the bigger story unfolding across the AI industry.

The real headline of the past six months isn’t any single product or feature. It’s the bold claim by AI companies that their models can now genuinely reason, working through problems the way humans do. That claim matters to everyone from everyday consumers to governments worldwide, which makes one question unavoidable: is it actually true?

The Big Question: Can AI Reason?

If you’ve used ChatGPT or similar models, you may have noticed that they are great at quickly spitting out answers to straightforward questions. Newer models, such as OpenAI’s o1 and DeepSeek’s R1, promise something more. Their developers say these models use "chain-of-thought reasoning": they break complex problems into smaller, more manageable pieces and solve them step by step, much as a human would.

The results so far have been impressive. These models can ace difficult logic puzzles, solve math problems, and even write flawless code on the first try. Yet they still stumble on relatively simple problems. This paradox leaves experts divided: some argue that the models are genuinely reasoning, while others believe they are merely imitating the process without real understanding.

What is "Reasoning"?

Before we dive into whether AI can genuinely reason, it’s important to define what "reasoning" actually means. In the AI context, companies like OpenAI describe reasoning as breaking a problem into smaller tasks and working through them logically, a clearly valuable ability when tackling complex problems.

However, this narrow view may not capture the full breadth of human reasoning, which takes many forms: deductive (drawing specific conclusions from general principles), inductive (generalizing from specific observations), analogical (drawing parallels between similar situations), and causal (understanding cause and effect), among others.

For a human, solving a complex problem usually involves stepping back, breaking it into manageable parts, and carefully thinking through each step. Chain-of-thought reasoning is certainly helpful, but it’s far from the only kind of reasoning humans use. One of the most important aspects of human reasoning is our ability to generalize: to identify patterns or rules from limited data and apply them to new, unseen situations.

Can AI models do this? That’s the big question at the heart of the debate.

The Skeptic’s View: AI Is Just Mimicking, Not Reasoning

Many AI experts remain skeptical that models like o1 are truly capable of reasoning. Shannon Vallor, a philosopher of technology, argues that they are engaged in what she calls "meta-mimicry." In her view, these models aren’t reasoning at all; they are imitating the processes humans use to reason, processes they picked up from their vast training datasets.

For Vallor, this is no different from what earlier models like ChatGPT do: generate responses based on patterns learned from training data. The newer models may give the illusion of reasoning, but they are not actually breaking a problem down and thinking it through the way a human would. Instead, they rely on heuristics, shortcuts that can produce correct answers without any real understanding of the problem.

This view draws support from the fact that models like o1 still fail on some simple questions, which suggests they might not be doing any real reasoning at all. If these models still make mistakes on basic tasks, Vallor asks, why should we assume they’re fundamentally different from their predecessors?

The Believer’s Case: AI Models Are Reasoning, But Differently

On the other side of the debate, many experts take a more favorable view. Ryan Greenblatt, chief scientist at Redwood Research, argues that these models are doing something that genuinely resembles reasoning. They may not generalize as flexibly as humans do, and their reasoning may be more rigid, but they still work through problems in a way that goes beyond simple pattern recognition.

Greenblatt offers an analogy: consider a physics student who has memorized 500 equations. Faced with a problem, the student picks the equation that fits the situation and applies it. That process is less intuitive than a true expert’s, more mechanical and less flexible, but it is still reasoning.

AI models take this further. Rather than memorizing a handful of solutions, they have absorbed vast amounts of information and apply it to problems in ways that appear reasoned. As a result, they can solve problems they have never explicitly encountered, which suggests they are reasoning, albeit in a more rigid and less adaptable way than humans.

The Middle Ground: A Spectrum of Reasoning

The truth, it seems, lies somewhere between these two extremes. AI models are not simply mimicking human reasoning; they are engaging in a form of reasoning, but one that is far more constrained and reliant on memorization than human thinking.

A concept called "jagged intelligence" has emerged to describe this phenomenon. As computer scientist Andrej Karpathy explains, AI models exhibit intelligence full of peaks and valleys: they can perform remarkably well on some tasks (like solving complex math problems) while struggling with others that might seem very similar to a human.

In other words, AI’s performance isn’t uniformly impressive: it shows strengths in some areas and weaknesses in others. This jagged profile stands in stark contrast to human intelligence, which tends to be more consistent across different problem-solving tasks.

What Does This Mean for the Future?

So, is AI truly reasoning? The answer isn’t a simple yes or no. As Ajeya Cotra, a senior analyst at Open Philanthropy, puts it, AI models are somewhere between pure memorization and true reasoning. They are, in effect, "diligent students" who rely heavily on memorized knowledge and heuristics but also engage in some form of reasoning to apply that knowledge to new situations.

Recognizing AI’s "jagged intelligence" suggests that the key to understanding its capabilities is not to measure it against human intelligence. Instead, we need to appreciate it as something different: powerful in some ways, but deeply flawed in others.

For now, the best way to use AI is to recognize what it’s good at and what it’s not. AI excels at tasks with a clear, objective solution that can be checked, such as writing code or generating website templates. In these domains, AI can be a powerful tool. But for complex judgment calls or situations with no clear right answer, such as moral dilemmas or personal advice, we should be more cautious.

As AI continues to develop, these systems may one day truly rival human reasoning. Until then, it’s crucial to treat AI as a tool: one that can augment human capabilities but not replace human judgment.